Amiga OCS vs Amiga AGA (020)

category: general [glöplog]

I use Photoshop for stills but for palette generation it's rubbish. I'd recommend pngquant which produces much better results. We also use Blender for 3D.

pngquant looks really good! It also comes with a library (libimagequant), which may be useful.

I'm writing my own color reduction routine that takes into account that N color changes can be made per line using the copper. Currently it can only reduce colors.

What I do is:

1 - Collect all unique colors of an image in an array

2 - Check which two are most similar

3 - Merge them together.

4 - Go to 1 until the wanted number of colors is present in the image

5 - Remap the image to the new unique colors

:D
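A minimal Python sketch of the five steps above. It assumes 8-bit RGB tuples, squared Euclidean RGB distance as "most similar", and a pixel-count-weighted average as the merged color (the post doesn't say how two colors are merged). `greedy_merge` is a hypothetical name. Instead of re-collecting colors on every pass, it remembers which original colors were folded into each entry so step 5 can remap directly.

```python
from collections import Counter
from itertools import combinations


def dist2(a, b):
    """Squared Euclidean distance between two RGB tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def greedy_merge(pixels, target):
    """Reduce a list of (r, g, b) pixels to at most `target` colors.

    Returns (remapped_pixels, palette).
    """
    # Step 1: unique colors as [center, pixel_count, original_colors_in_entry].
    clusters = [[tuple(map(float, c)), n, {c}] for c, n in Counter(pixels).items()]
    # Steps 2-4: merge the most similar pair until `target` entries remain.
    while len(clusters) > max(target, 1):
        i, j = min(
            combinations(range(len(clusters)), 2),
            key=lambda ij: dist2(clusters[ij[0]][0], clusters[ij[1]][0]),
        )
        (ca, wa, ma), (cb, wb, mb) = clusters[i], clusters[j]
        # Assumption: the merged color is the pixel-count-weighted average.
        center = tuple((x * wa + y * wb) / (wa + wb) for x, y in zip(ca, cb))
        clusters[i] = [center, wa + wb, ma | mb]
        del clusters[j]  # combinations() yields i < j, so index i is unaffected
    # Step 5: remap each original color to its entry's rounded center.
    palette, lookup = [], {}
    for center, _, members in clusters:
        color = tuple(int(x + 0.5) for x in center)
        palette.append(color)
        for m in members:
            lookup[m] = color
    return [lookup[p] for p in pixels], palette


pixels = [(0, 0, 0), (4, 0, 0), (100, 100, 100), (100, 100, 100)]
remapped, palette = greedy_merge(pixels, 2)
# palette == [(2, 0, 0), (100, 100, 100)]
```

Every merge scans all remaining pairs, so the total cost grows roughly with the cube of the number of unique colors. That is why thinning out the colors first pays off so much.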

Removing a few low bits of the color values first speeds it up a lot.
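Clearing low bits before the merge loop collapses near-identical colors, so far fewer unique colors enter the pairwise search. With 4 bits cleared per channel, at most 16 x 16 x 16 = 4096 colors can remain, the same number as in the OCS 12-bit color space. A sketch; the function name is hypothetical:

```python
def truncate_low_bits(pixels, bits):
    """Clear the `bits` lowest bits of every 8-bit channel of (r, g, b) pixels."""
    mask = (0xFF << bits) & 0xFF  # e.g. bits=4 -> 0xF0
    return [tuple(ch & mask for ch in p) for p in pixels]


# A 256-step gray ramp collapses to 16 distinct grays.
ramp = [(v, v, v) for v in range(256)]
unique_after = len(set(truncate_low_bits(ramp, 4)))  # 16
```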

To exploit the N color changes per line, I plan to do the above and then, for each line, switch the 6 colors that have the worst error on that line.
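One reading of that per-line plan, sketched under assumptions: a line is a list of RGB tuples, the global palette is already reduced, and a color's "error" is its pixel count on the line times the squared distance to its nearest palette entry. `worst_colors` is a hypothetical helper that returns the colors most worth a copper change on that line.

```python
from collections import Counter


def dist2(a, b):
    """Squared Euclidean distance between two RGB tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def worst_colors(line, palette, n=6):
    """Up to n distinct colors of one scanline with the largest total error.

    Colors the palette already matches exactly (error 0) are never returned.
    """
    counts = Counter(line)

    def total_error(color):
        # pixel count x squared distance to the closest palette color
        return counts[color] * min(dist2(color, p) for p in palette)

    ranked = sorted(counts, key=total_error, reverse=True)
    return [c for c in ranked[:n] if total_error(c) > 0]
```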

Hoping I will not encounter horizontal stripe artefacts.

I have only been lurking on Pouet for the last 7 years or so, but now that I am no longer lurking I would just like to say: Loaderror, you rock. It's always nice to see some practical commentary among all the noise.

And just so I am not guilty of doing the very thing that annoys me, I would like to add some ASCII data from a tutorial about AHX/HVL that I am writing for a magazine:

Square Power Chord

Quote:

0 F#4*4 320 020 <Set 3xx & 0xx>

1 C-3 3 234 411 <LFO and "Thump">

======

2 C-2 0 F02 000 ROOT <Leave yourself some room to move>

3 G-2 0 000 000 7TH <7th>

4 C-3 0 502 000 +OCT <Loop>

I will be checking to see if anyone has seen Dave (Ript), but honestly I have no interest in participating on the BBS further, so as you were :) dodke, I don't suppose you have heard from him? There is another thread where I would like to be notified. Because I know that you know my mate personally, I thought that maybe you had not seen that he is unreachable.

The 020's bitfield instructions were useful for CPU rendering directly to chip RAM.
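To illustrate why the 68020 bitfield instructions (BFINS, BFSET, BFEXTU and friends) suit planar chip RAM: a field of 1 to 32 bits can start at any bit offset, so a horizontal run of pixels in one bitplane is a single instruction instead of separate mask-and-merge steps for each word it straddles. The Python below only emulates the BFINS semantics (big-endian bit numbering, offset counted from the MSB of the first byte); `bfins` and `fill_span` are hypothetical names, not Amiga APIs.

```python
def bfins(mem: bytearray, bit_offset: int, width: int, value: int) -> None:
    """Emulate BFINS: write the low `width` bits of `value` into `mem`,
    starting `bit_offset` bits after the MSB of mem[0]."""
    if not 1 <= width <= 32:
        raise ValueError("68k bitfields are 1..32 bits wide")
    for i in range(width):
        bit = (value >> (width - 1 - i)) & 1  # most significant field bit first
        pos = bit_offset + i
        byte, mask = pos // 8, 0x80 >> (pos % 8)
        if bit:
            mem[byte] |= mask
        else:
            mem[byte] &= ~mask & 0xFF


def fill_span(plane: bytearray, bytes_per_row: int, x: int, y: int, length: int) -> None:
    """Set `length` (<= 32) consecutive pixels in one bitplane row, like one BFSET."""
    bfins(plane, y * bytes_per_row * 8 + x, length, (1 << length) - 1)
```

On the real CPU each call is one instruction; the loop here only spells out which bits change.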

Dual OCS/AGA demos with beefed-up effects when running on AGA? Have any been done? I seem to remember that Sanity's Roots perhaps had stuff that was not shown when running on OCS.

But it would be cool to have a demo that does the same effects on both, just in higher quality on the AGA machines.

How to Skin a Cat had some beefed-up effects. You can check the linked video for a direct comparison.

@loaderror, that's quite a naive-sounding approach to color quantization. How does it perform?

@oswald: the performance is awful. If I first quantize the number of bits per color, the conversion takes several seconds; without that, we're talking a minute or so per picture IIRC. It depends on how many unique colors there are in the image, since every merge step compares every remaining pair of colors.

This is the absolute simplest thing that can work though.

I first used it to reduce a 640x256 normal map to an image where every scanline has ~12 unique AGA colors representing the normals. I then use these normals to compute a simple directional light per scanline and set the resulting colors with the copper on every scanline. The result is a directional-light bump effect that is quite fast, because effectively only 12 x 256 normals need to be shaded.
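The lighting step is cheap because only the per-line palette is shaded, never the pixels. A minimal Lambert (N·L) sketch, assuming unit-length normals, a hypothetical white light color and 8-bit output channels; writing the results to the color registers with the copper is not shown, and the function names are hypothetical.

```python
import math


def shade_line(normals, light, tint=(255, 255, 255)):
    """Lambert-shade one scanline's palette of unit normals.

    normals: list of (nx, ny, nz) unit vectors, e.g. the ~12 per line.
    light:   direction towards the light; normalised here.
    tint:    hypothetical 8-bit RGB light color.
    Returns one 8-bit (r, g, b) color per normal.
    """
    length = math.sqrt(sum(c * c for c in light))
    lx, ly, lz = (c / length for c in light)
    colors = []
    for nx, ny, nz in normals:
        # N.L, clamped to 0 for normals facing away from the light
        intensity = max(0.0, nx * lx + ny * ly + nz * lz)
        colors.append(tuple(int(c * intensity + 0.5) for c in tint))
    return colors


def shade_frame(line_normals, light):
    """Shade every scanline: 12 normals x 256 lines is only 3072 dot products."""
    return [shade_line(normals, light) for normals in line_normals]
```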

The problem is that it didn't look any good, because of discontinuities between adjacent lines where the colors got reduced differently. With ~64 colors changed per line it looked seamless, though. Too bad the copper is not fast enough to change that many AGA colors per line.

Then I used it to generate a normal map plus ambient occlusion map reduced to just 256 unique normals for the whole image, and simply shaded the palette. This looked OK and is now in the Neonsky demo (the mandelbulb image).

I now want to revisit the effect with a base palette of 256 colors plus up to 12 color changes per line. That way I can, per line, either pick a new color or use one of the 256 already set, whichever generates the least error.
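A brute-force sketch of the "new color or one of the 256 already set" choice for a single line. Assumptions: `candidates` is some list of colors you'd like to add on this line (for example its worst-error colors), each change overwrites one base register, and every line starts from the base palette. On real hardware a register rewritten by the copper keeps its value on the following lines until it is written again, so either those writes get undone or the next line's starting palette has to be tracked. Function names are hypothetical.

```python
def dist2(a, b):
    """Squared Euclidean distance between two RGB tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def line_error(line, palette):
    """Total squared error when every pixel takes its nearest palette color."""
    return sum(min(dist2(px, p) for p in palette) for px in line)


def choose_line_changes(line, base, candidates, max_changes=12):
    """Greedily overwrite base registers for one line.

    A change is kept only if it lowers the line's error, so each pixel
    effectively ends up with whichever color (base or new) fits it best.
    Returns (line_palette, {register_index: new_color}).
    """
    palette = list(base)
    changes = {}
    best = line_error(line, palette)
    for color in candidates:
        if len(changes) >= max_changes:
            break
        best_slot = None
        for slot in range(len(palette)):
            if slot in changes:
                continue  # don't overwrite a change already made on this line
            old, palette[slot] = palette[slot], color
            err = line_error(line, palette)
            palette[slot] = old
            if err < best:
                best, best_slot = err, slot
        if best_slot is not None:
            palette[best_slot] = color
            changes[best_slot] = color
    return palette, changes
```

It tries every register for every candidate, on the order of candidates x 256 x line width x 256 distance calculations per line, so it is only useful as a slow reference to check faster heuristics against.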

I meant how well it quantizes colors, not how fast :) Median cut sounds better, tho I have no idea how to do that efficiently with hundreds of thousands of colors.
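A compact sketch of classic median cut, for comparison: start with one box holding all colors, repeatedly split the box with the widest channel range at the median of that channel, and use each box's mean as a palette entry. Colors are plain RGB tuples where duplicates act as weights; `median_cut` is a hypothetical name.

```python
def median_cut(colors, n_boxes):
    """Return up to n_boxes palette colors (box means) for a list of (r, g, b)."""

    def channel_range(box, ch):
        return max(c[ch] for c in box) - min(c[ch] for c in box)

    def spread(box):
        return max(channel_range(box, ch) for ch in range(3))

    boxes = [list(colors)]
    while len(boxes) < n_boxes:
        splittable = [i for i, b in enumerate(boxes) if spread(b) > 0]
        if not splittable:
            break  # every box holds a single color; nothing left to split
        box = boxes.pop(max(splittable, key=lambda i: spread(boxes[i])))
        ch = max(range(3), key=lambda k: channel_range(box, k))
        box.sort(key=lambda c: c[ch])
        mid = len(box) // 2  # spread > 0 implies len >= 2, so both halves are non-empty
        boxes += [box[:mid], box[mid:]]
    return [tuple(sum(c[k] for c in b) // len(b) for k in range(3)) for b in boxes]
```

Each split is a sort, roughly O(n log n), so with hundreds of thousands of pixels it usually pays to run it on a histogram of unique (possibly bit-truncated) colors and carry the counts along as weights, which this sketch does not do.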

The need to restrict colors per line while also avoiding horizontal banding is quite specific, tho.

@loaderror: your method is a pretty similar concept to LBG (except for the collation and clustering). What you can try is:

1. Select x amount of colors at random

2. go through each pixel color in the image and map it to the nearest of the randomly selected colors (do not merge yet)

3. once all the image's pixels are read, merge each set of color entries (which contain the closest matches), then repeat steps 2 and 3 a few times

You may end up with some orphan entries; these can be refined further and the clustering repeated a few times.
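The three steps above, sketched in Python. With random starting colors this is essentially the k-means (Lloyd) iteration that LBG builds on. Assumptions: squared RGB distance, a fixed number of iterations, and re-seeding orphan entries from a random image color, which is just one way to handle them. `cluster_palette` is a hypothetical name.

```python
import random


def dist2(a, b):
    """Squared Euclidean distance between two RGB tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def cluster_palette(pixels, k, iterations=10, seed=0):
    rng = random.Random(seed)
    unique = sorted(set(pixels))
    # Step 1: pick k starting colors at random from the image.
    centers = rng.sample(unique, min(k, len(unique)))
    for _ in range(iterations):
        # Step 2: assign every pixel to its nearest center (no merging yet).
        sums = [[0, 0, 0, 0] for _ in centers]  # r, g, b, pixel count
        for p in pixels:
            i = min(range(len(centers)), key=lambda j: dist2(p, centers[j]))
            for ch in range(3):
                sums[i][ch] += p[ch]
            sums[i][3] += 1
        # Step 3: merge each group into its average color.
        new_centers = []
        for s in sums:
            if s[3] == 0:
                # Orphan entry: nothing mapped to it; re-seed it (assumption).
                new_centers.append(rng.choice(unique))
            else:
                new_centers.append((s[0] / s[3], s[1] / s[3], s[2] / s[3]))
        centers = new_centers
    return [tuple(int(c + 0.5) for c in center) for center in centers]
```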

To reduce banding, you would need to analyse the current line and change the least-occurring color entries instead (or those that do not form runs of consecutive pixels).
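The "change the least-occurring entries" idea is cheap to compute once a line is indexed. A sketch, assuming `line_indices` holds one palette index per pixel; the helper name is hypothetical. Registers that are unused on the line come first, and reloading those cannot disturb that line itself.

```python
from collections import Counter


def least_used_slots(line_indices, palette_size, n):
    """The n palette indices used least on one scanline, unused ones first."""
    counts = Counter(line_indices)  # missing indices count as 0
    return sorted(range(palette_size), key=lambda i: counts[i])[:n]
```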

Fast & furious: Build a random palette from [n] randomly chosen pixels for [n] colors every frame; mod [n] every (linear addressed) pixel into your palette, voilà. Hefty noise and horrid pseudo quantization rolled into one. ;)

(Oh, and because no one has mentioned it so far: the "usual" method is to divide and multiply by the same value. The integer division throws away precision by dropping the remainder; the multiplication scales the value back up, with the rounding error blown up into "not really, but hey" sorts of quantization.)
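The divide-then-multiply trick in code. For a power-of-two step it gives the same result as masking off the low bits; other steps give evenly spaced levels that bit masking can't produce. A sketch; `quantize` is a hypothetical name.

```python
def quantize(value, step):
    """Integer-divide, then multiply back: only multiples of `step` survive."""
    return (value // step) * step
```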

Here is an example of a 4-5 second conversion. First the low 4 bits of each channel get chopped off and random dither is applied, then the remaining colors are reduced as above.
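A sketch of "chop 4 bits and apply random dither", assuming the dither is uniform integer noise in 0..15 added before truncating (the post doesn't specify the noise). Ignoring the clamp at 255, truncating x + U gives exactly x on average, so smooth gradients turn into noise instead of bands. The function name and `seed` parameter are hypothetical.

```python
import random


def random_dither_truncate(pixels, bits=4, seed=None):
    """Add uniform noise below the cut, then clear the low `bits` of each channel."""
    rng = random.Random(seed)
    step = 1 << bits
    mask = 0xFF & ~(step - 1)  # bits=4 -> 0xF0
    return [
        tuple(min(255, ch + rng.randrange(step)) & mask for ch in p)
        for p in pixels
    ]
```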

Original:

Reduced to 256 colors (4 bits cut off each channel)

Same reduction using Gimp and Floyd-Steinberg dithering

Clearly the method in the middle adds a more manly mustache, while Gimp's is merely pre-pubertal. Who would the female normal-map faces like to take to prom, eh??
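For comparison, the Floyd-Steinberg error diffusion that the Gimp option is named after, as a plain sketch (not Gimp's code): scan left to right, top to bottom, replace each pixel with its nearest palette color, and push the error to the unvisited neighbours with weights 7/16, 3/16, 5/16 and 1/16. The image layout (rows of RGB tuples) and the function name are assumptions.

```python
def floyd_steinberg(img, palette):
    """img: list of rows of (r, g, b); returns rows of palette colors."""
    h, w = len(img), len(img[0])
    buf = [[list(map(float, px)) for px in row] for row in img]  # accumulates error
    out = [[None] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            old = buf[y][x]
            new = min(palette, key=lambda p: sum((a - b) ** 2 for a, b in zip(old, p)))
            out[y][x] = new
            err = [a - b for a, b in zip(old, new)]
            # Distribute the error to right, lower-left, below and lower-right.
            for dx, dy, wgt in ((1, 0, 7 / 16), (-1, 1, 3 / 16), (0, 1, 5 / 16), (1, 1, 1 / 16)):
                nx, ny = x + dx, y + dy
                if 0 <= nx < w and ny < h:
                    for k in range(3):
                        buf[ny][nx][k] += err[k] * wgt
    return out
```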

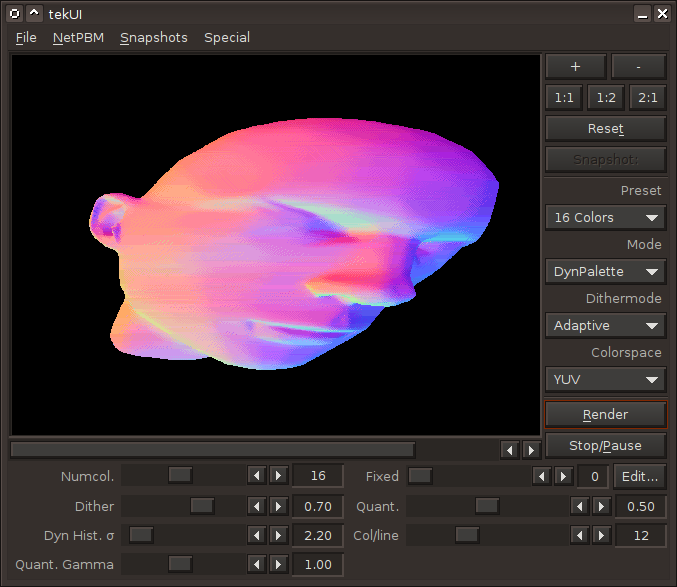

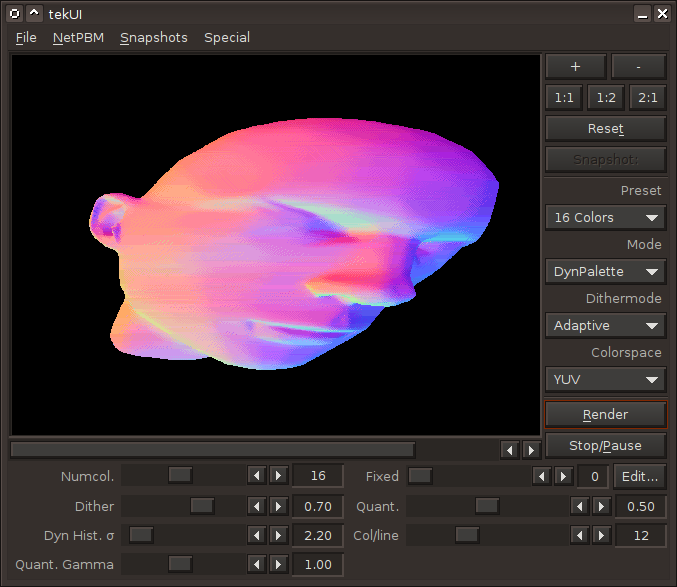

Here is what it looks like if I reduce to N colors per line.

12 AGA colors - heavy artefacts

64 AGA colors - not too bad

OCS dynhires 12 colors per line with dithering:

@bifat Looks really great! And it's only OCS colors. It says tekUI, so is this your own tool? Have you used it in any demo yet?

Do you do anything to avoid discontinuities between two consecutive horizontal lines, or is that just remedied by good dither settings?

I should consider dropping the resolution to lowres and using OCS colors.

loaderror: yes, here: http://www.pouet.net/prod.php?which=68255 and to a lesser extent here: http://www.pouet.net/prod.php?which=68903. In the first thread I wrote a bit about how the converter works. For the slideshow I twiddled with the sliders for hours to get the best results out of it; this one worked quite well with the default settings.

Nicely off topic with this colour quantizing discussion.. well done :D

I totally missed Elevation the first time. Looks really good! Hope to see more of this in upcoming demos.

Now back on track.. AGA vs OCS. Discuss!

Quote:

Nicely off topic with this colour quantizing discussion.. well done :D

Isn't "effects enabled by AGA which don't need a fast CPU" exactly what this thread is about? :)

Amiga - where colorcycling still counts as effects. \:D/

Quote:

Isn't "effects enabled by AGA which don't need a fast CPU" exactly what this thread is about? :)

I guess... but the colour reduction discussion is worthy of its own thread :)

Quote:

Amiga - where colorcycling still counts as effects. \:D/

If it looks cool?... why not :D I did some colour cycling in the intro for Chiperia 6, and it gave a great effect ;)

@Dan: I definitely didn't mean that it shouldn't be done. ;)

There are no rules :D and the "cheaper" the effect (cycle-wise), the better...

If you wanna see how good colour cycling can be, check out Britelite's entry from Datastorm 2014, called Cracktro.

I want to see it. Give us a link, please!