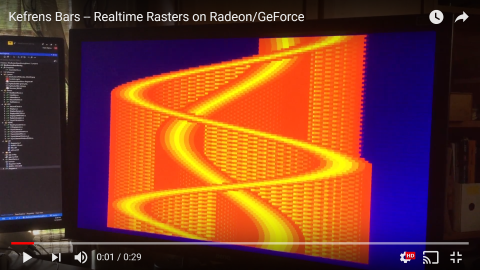

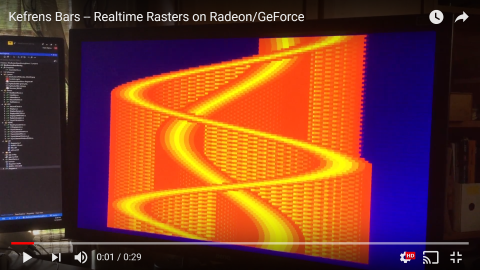

True rasterdemo ("Raster Interrupts"-like) working on GeForce/Radeon! -- Comments?

category: general [glöplog]

For kicks, I made an animated GIF of the Kefrens Bars glitching.

That occurs when I start launching a Windows application in the background, which screws around with the raster timings.

Each Kefrens Bars row is generated in raster real-time. 8000 tearlines per second. One pixel row per framebuffer. Each Kefrens Bar pixel row is a separate framebuffer generated in raster real time.

All crammed through 8000 Present() calls per second (Direct3D) or 8000 glutSwapBuffers() calls per second (OpenGL) -- MonoGame supports both Direct3D and OpenGL. The result looks identical on both. It works with any tearline-supported API!

Raster timing errors simply stretch a "Kefrens Bars pixel row" vertically, because the tearlines jitter all over the place -- so it's quite timing-forgiving. That forgiveness made it possible to do this in a practically Nerf(tm) scripting language (C# + MonoGame) rather than assembly -- and cross-platform!
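
For anyone unfamiliar with the effect, here is a minimal sketch of the classic Kefrens accumulation: one pixel row is kept between rows and never cleared, and each new row stamps a small bar onto it. This is not the Tearline Jedi code; `WIDTH`, `BAR` and the sine constants are made-up illustration values, and it is written in Python for brevity rather than C#.

```python
import math

WIDTH = 64               # hypothetical row width in pixels
BAR = [1, 2, 3, 2, 1]    # hypothetical bar shading, darker at the edges

def kefrens_rows(num_rows, width=WIDTH):
    """Yield one pixel row per 'frame'; each row keeps every bar drawn so far."""
    row = [0] * width
    for i in range(num_rows):
        # The bar position wobbles along a sine as we move down the screen.
        centre = width // 2 + int(math.sin(i * 0.15) * (width // 3))
        for k, shade in enumerate(BAR):
            x = centre - len(BAR) // 2 + k
            if 0 <= x < width:
                row[x] = shade
        yield list(row)  # a copy: this is what one framebuffer would contain

rows = list(kefrens_rows(100))
```

In the tearline version each yielded row would fill a whole framebuffer, and the next tearline decides how many scan lines of it actually reach the screen.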

I don't think anyone has ever done true real-raster pixel row cramming via overkill VSYNC OFF frameslicing. It's API independent and platform independent, as every tearline ever generated in human history is simply a raster, lying in wait -- and clocks are precise enough nowadays to take advantage of them.

In reality, I can simply use multiple pixel rows per Kefrens Bars "pixel row".

Such as fancy sprites or 3D graphics per Kefrens Bars row. Maybe colored tiny teapots per Kefrens Bars. Or ice cubes instead of pixels.

I need to brainstorm how to raise this to a better hybrid, because this demoscene submission doesn't qualify under "retro programming" rules: it requires a fairly powerful GPU (recent Radeon or GeForce) for the overkill frameslice rate necessary for realtime raster "pixel rows".

So it has to be submitted under rules normally reserved for ultra-fancy-graphics demos (e.g. realtime wild rules of Assembly 2018).

Which may not get enough votes to be displayed on the "big screen".

Any ideas? Help? How do I improve this so it gets displayed on the "big screen" at Assembly 2018?

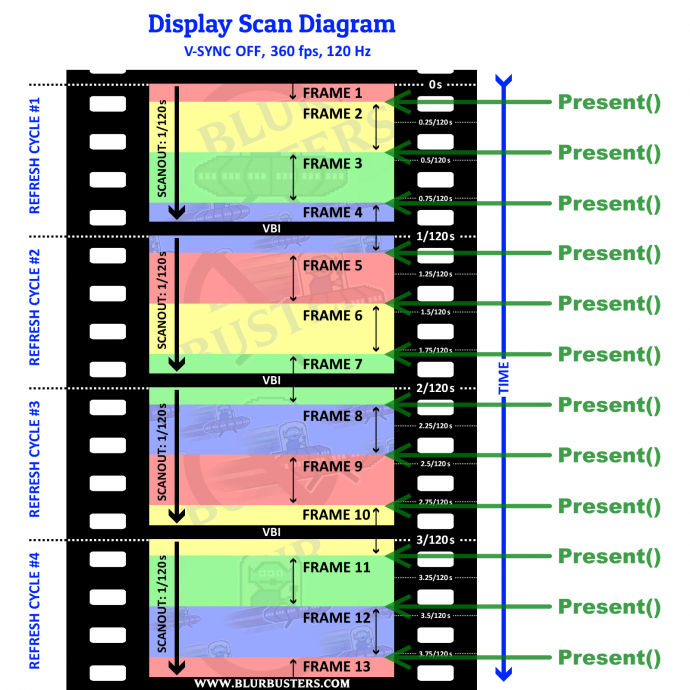

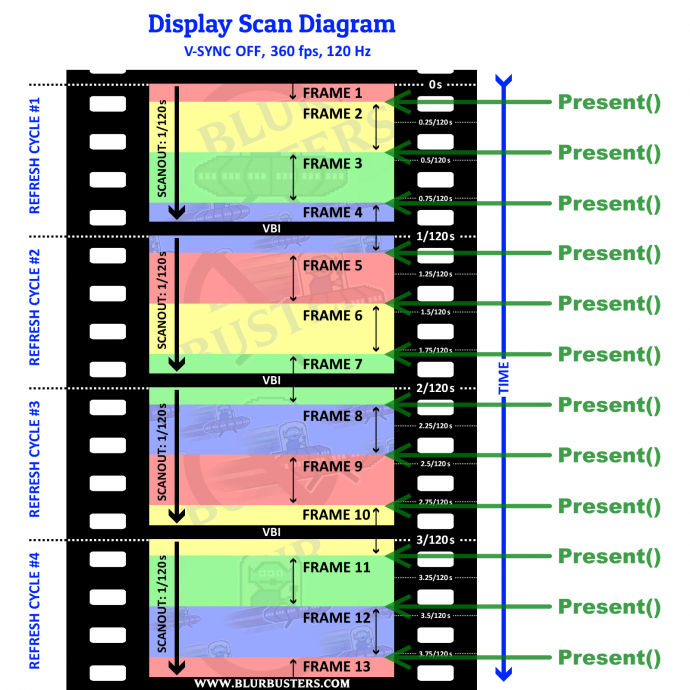

Someone asked about a diagram to understand better, so.

It's surprisingly simple. After blackboxing all the raster calculations and VSYNC timestamp monitoring, the Kefrens Bars module is only 92 lines of C# code -- the one that generated the above YouTube video.

For those who want to understand how the bleep it works, tearlines are simply precision-timed frame buffer presentations. Accurate time-offsets from blanking intervals.

Works on any display: analog, digital, projector, smartphone, laptop LCD, tablet. Works at any refresh rate: 24Hz, 30Hz, 60Hz, 75Hz, 100Hz, 120Hz, 144Hz, 240Hz, as well as GSYNC and FreeSync (some advanced explanations are needed to explain the hybrid GSYNC + VSYNC OFF mode -- but the concept is to manually trigger the refresh cycle first, then beam-race new tearlines into that particular refresh cycle). Less than 1% of screens will scan sideways or upsidedown (e.g. OnePlus 5), so you have to adjust beamracing for those. But every GPU ever made for a computer outputs top-to-bottom in the default screen orientation (landscape displays).

VSYNC OFF tearlines are simply rasters. Tearlines chop up the framebuffer in raster-style fashion, as a time-based offset from VBI.

You can simply use the RDTSC instruction or things like std::chrono::high_resolution_clock::now() to guess the raster scan line number as a time-based offset from VBI (VSYNC timestamps -- the timestamps of the beginning of a refresh cycle). No raster register is needed to guess the scan line number to a reasonable accuracy. The scanline offset error is only about 1-2% if you don't know the horizontal scanrate (VBI size), and it becomes more raster-exact, about 0.1%, if you know the horizontal scanrate (the number of pixel rows the GPU outputs per second).
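
The guess described above can be sketched roughly like this, in Python for brevity. It assumes the VSYNC timestamp marks scan line 0 and that you know (or estimate) the vertical total, i.e. visible rows plus blanking rows; `estimate_scanline` and its parameter names are made up for this illustration.

```python
def estimate_scanline(seconds_since_vsync, refresh_hz, vertical_total):
    """Guess the current scan line from the time elapsed since the last VSYNC timestamp.

    vertical_total counts the visible rows plus the blanking (VBI) rows.
    """
    refresh_period = 1.0 / refresh_hz
    # Fraction of the current refresh cycle that has already been scanned out.
    phase = (seconds_since_vsync % refresh_period) / refresh_period
    return int(phase * vertical_total)

# Halfway through a 60Hz refresh with a 1125-line vertical total (typical for 1080p):
print(estimate_scanline(1 / 120, 60, 1125))  # 562
```

In a real program `seconds_since_vsync` would come from a high-resolution clock (e.g. `time.perf_counter()` in Python) minus the last VSYNC timestamp.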

VSYNC OFF simply interrupts the scanout with a new frame. This concept is simply piggybacked as rasters. Every tearline ever created is just a raster.

For improved raster precision you do need to pre-Flush() before your precision busywait loop (or nanosleep, on CPUs that support true event-driven nanosleeps), which runs just before your raster-exact-timed Direct3D Present() or OpenGL glutSwapBuffers().

That places your tearline practically exactly where you want it.

I simply use microsecond-accurate busywait loops since that's guaranteed on all platforms, since not all platforms support power-efficient nanosleeping, but there are many tricks to get the precision needed.
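
A rough sketch of the flush, busywait, present order, in Python for brevity. `flush` and `present` are hypothetical callbacks standing in for whatever your graphics API provides (a pre-Flush() and Present() / glutSwapBuffers()); they are not real library calls here, and Python's spin loop will be coarser than a native one.

```python
import time

def busywait_until(target_ns):
    """Spin on the high-resolution clock until target_ns; return the overshoot in ns."""
    while True:
        now = time.perf_counter_ns()
        if now >= target_ns:
            return now - target_ns

def present_at(target_ns, flush, present):
    """Call flush() first, spin until the target time, then call present()."""
    flush()                                 # hypothetical: get queued GPU work out of the way
    overshoot = busywait_until(target_ns)
    present()                               # hypothetical: the tearline lands about here
    return overshoot
```

The returned overshoot is handy for logging how far off each tearline was.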

As you can see in the videos, the necessary raster precision is definitely there. It has been for the last eight to ten years, but few understood all of the concepts simultaneously well enough until recently, and now this lag-reducing trick is being used for VR / emulators / etc.

Oops, one minor wording error: I need to clarify something first. When I said "Less than 1% of screens will scan sideways or upsidedown (e.g. OnePlus 5)" I meant the cable signal too.

Other screens have internal scan-conversion (e.g. plasma, DLP, etc) that converts refresh rates and scan direction. But all GPUs have the pixels top-to-bottom on the video output and cable. Cable scan direction and display scan direction can be different. But that doesn't matter for us. We just have to honor pre-existing scan direction on the cable, and beam race that. The display will simply buffer the pixels in the correct beamraced order and preserve all the top-to-bottom beamraced tearlines, even if the display internally scan-converts (e.g. refresh rate conversion in a plasma display, or scan-direction conversion in a DLP display).

What the display does next doesn't really matter, as long as it's within the expected parameters -- VSYNC OFF tearlines don't affect the video signal structure. Presenting a new framebuffer during VSYNC OFF simply splices a new frame mid-scanout, that's all -- and that's why tearing artifacts are always raster-positioned, every time tearing has ever been generated.

eSports players ($1B industry) prefer VSYNC OFF because it has the lowest input lag. Players tolerate tearing to get the lowest latencies. Tearing is normally a very annoying artifact or flaw. That said, this 'feature' is commandeered as rasters.

...So fundamentally, tearing exists because you're replacing a framebuffer mid-raster. That "annoying tearing artifact" you see with "VSYNC OFF" modes (basically unsynchronized framerates) -- the position of each tearline disjoint is the exact raster moment where the frame buffer got replaced while the graphics output is still in the middle of a refresh cycle. We ignore tearlines as an ordinary annoying artifact, without scientifically understanding where tearlines come from. But tearlines are simply where the whole screen framebuffer got replaced while the display cable is still scanning out mid-refresh. The disjoint occurs right there, right in that spot, at the raster. That's all tearing simply is -- they're just rasters, unbeknownst to us, lying under our noses.
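
To make that concrete, here is a toy simulation of one refresh cycle in which the framebuffer is replaced mid-scanout. It is only an illustration in Python; `scan_out` and its arguments are made-up names, and `swap_rows` is assumed to be sorted with one entry per frame after the first.

```python
def scan_out(frames, swap_rows, height):
    """Simulate one refresh cycle where the framebuffer is replaced mid-scanout.

    frames: frame labels, shown in order.
    swap_rows: scan line at which each later frame replaces the previous one.
    Returns the label of the frame that each scanned-out row came from.
    """
    current = 0
    output = []
    for row in range(height):
        # Move on to the next frame once the raster reaches its swap point.
        while current < len(swap_rows) and row >= swap_rows[current]:
            current += 1
        output.append(frames[current])
    return output

print(scan_out(["A", "B", "C"], swap_rows=[3, 6], height=8))
# ['A', 'A', 'A', 'B', 'B', 'B', 'C', 'C']
```

Every change of label in the output is a tearline. If a swap arrives one row late, the previous frame's strip simply gets one row taller.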

How does this help if the physical monitor has a different refresh rate than the emulated system and cannot be changed, say, 60 Hz vs 50 Hz? Then you'll still get tearing i.e. raster splits if vsync is off, or jerky motion if vsync is on. For demos, input lag has very little significance.

Btw, I never even heard the term "tearing" before gaming and emulators etc were mainstream. They used to be called raster splits.

Quote:

They used to be called raster splits.

Not in the C-64 world at least, where raster splits are something completely different :)

On second thought, the splits I was thinking of might actually have been called color splits, so disregard my comment :D

Quote:

How does this help if the physical monitor has a different refresh rate than the emulated system and cannot be changed, say, 60 Hz vs 50 Hz? Then you'll still get tearing i.e. raster splits if vsync is off, or jerky motion if vsync is on. For demos, input lag has very little significance.

The demo will have to speed up and slow down to adapt to the refresh rate. Tearline Jedi does. For example the bounce-motion math includes the refresh rate so the animation stays the same speed.
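
A minimal sketch of what "including the refresh rate in the motion math" can look like, in Python for brevity. The actual Tearline Jedi formula isn't shown in this thread; `bounce_y` and its default parameters are made up for illustration.

```python
import math

def bounce_y(frame_index, refresh_hz, bounces_per_second=1.0, height=100.0):
    """Height of a bouncing object, driven by elapsed time rather than frame count."""
    t = frame_index / refresh_hz  # seconds elapsed on this particular display
    return height * abs(math.sin(math.pi * bounces_per_second * t))
```

Frame 30 at 60Hz and frame 72 at 144Hz are both half a second in, so they give the same height and the bounce runs at the same speed on both displays.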

For emulators, just simply automatically switch to 60 Hertz or use 60fps VRR (VRR frames can be beam raced too!). Or if it's 59 or 61Hz, just slow down or speed things up a smidgen to sync.

WinUAE has adaptive beamrace, so it will speed up/slow down with slight refresh rate divergences, and you can use multiples, like 100 Hz -- it simply beam-races every other refresh cycle. Beamraces can be cherrypicked on a specific-refresh-cycles basis.

....meaning those extra refresh cycles simply behave like long VBIs. You do have to surge-execute 2x emulation velocity to beam-race a 100Hz refresh cycle instead of a 50Hz refresh cycle. But you simply proportionally distort the emulator clock during your surge-execution, and the emulator doesn't know it's surge executing. (As Einstein says, it's all relative -- time-wise).

....er long VBIs (to the emulator, which runs "slowly" during a long VBI to keep emulator CPU cycles the same as the original VBI). The real-world refresh cycles in between are simply duplicate refresh cycles (no beam racing being done, just repeating the last refresh cycle's completed beam-raced frame). Yeah, it gets confusing. Just like Einstein. But it works fine (surge-execute during fast refresh cycles, slow-execute during long intervals between fast refresh cycles) for 60fps@120Hz or 180Hz or 240Hz. Or 50fps at 100Hz or 150Hz or 200Hz with WinUAE.
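
The cherrypicking idea can be sketched like this, in Python for brevity. This is not WinUAE's code; `beamrace_plan` is a made-up helper that assumes the display runs at roughly an integer multiple of the emulated refresh rate.

```python
def beamrace_plan(display_hz, emulated_hz, cycles):
    """Mark which real refresh cycles get beam-raced, and how fast the emulator runs in them."""
    multiple = round(display_hz / emulated_hz)
    if multiple < 1:
        raise ValueError("display refresh rate is lower than the emulated one")
    # Emulator speed relative to original hardware while racing one real refresh cycle.
    surge = display_hz / emulated_hz
    plan = ["race" if i % multiple == 0 else "repeat" for i in range(cycles)]
    return multiple, surge, plan

print(beamrace_plan(100, 50, 4))
# (2, 2.0, ['race', 'repeat', 'race', 'repeat'])
```

With a 59Hz display and a 60Hz emulated machine the same helper gives a multiple of 1 and a surge factor slightly below 1, i.e. the "slow down a smidgen" case.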

...That technique won't work for Tearline Jedi; since it's not rasterplotting into an emulated refresh cycle. So unlike emulators (which can just repeat-refresh the already-completed emulator refresh cycle, without beam-racing it again because it was an emulated raster and it's already framebuffered) --

Tearline Jedi must beamrace every single refresh cycle to avoid glitches, since all the beamraced graphics are instantly gone the next refresh cycle. So it must keep repeat-beamracing.

I've now posted a new improved Kefrens Bars video.

Look at how it glitches when I drag the window around.

The glitching is lots of fun - it's an excellent visualization of performance consistency.

At 1080p 60Hz the horizontal scan rate is about 67kHz. At 1080p 144Hz it is about 162kHz (162000 pixel rows output sequentially by the graphics output per second), so a 1/162000sec pause shifts a tearline downwards by 1 pixel. That's the fundamental concept of exactly positioning rasters via precision-timed Present() calls. :D
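
The arithmetic behind those numbers, as a small Python sketch. It assumes a vertical total of 1125 lines (1080 visible plus blanking), which is the standard 1080p60 timing and also matches the 162kHz figure at 144Hz; real 144Hz monitors may use a different vertical total. The function names are made up for illustration.

```python
def horizontal_scan_rate(refresh_hz, vertical_total):
    """Rows scanned out per second, counting the blanking rows."""
    return refresh_hz * vertical_total

def present_delay(target_scanline, refresh_hz, vertical_total):
    """Seconds after the VSYNC timestamp at which the raster reaches target_scanline."""
    return target_scanline / horizontal_scan_rate(refresh_hz, vertical_total)

print(horizontal_scan_rate(60, 1125))   # 67500
print(horizontal_scan_rate(144, 1125))  # 162000
```

`present_delay` is the inverse of guessing the scan line from a time offset: it gives the time offset to wait for before presenting so the tearline lands on a chosen row.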

Bumping this -- I'm still recruiting a volunteer to help me fancy this up for submission to Assembly 2018.

maybe try a post at https://wanted.scene.org, it's the place for these things.

or drop by on irc and try your luck in direct conversation (#revision/IRCnet is a good start).

for the lazy http://wanted.scene.org

Hi, I just want to say I find this awesome and inspiring and applaud both the idea and the work involved. Also, what exactly are you looking for in terms of skills, language, type of work ie technical or artistic, and amount of work ie hours, days, weeks?

I'm open to anything really; the important thing is that I need an ally, someone who understands what it takes.

1. I'm a deafie so someone will have to help add sound to the demo. Could be minutes, hours, or days -- maybe just a background playing music file and just-be-done-with-it.

and/or

2. Help bring it to a standard that will get it onto the big screen at, say, Assembly 2018 ... Like improve the graphics or have it make fun of itself (joking about turning sheer GeForces into mere Atari TIAs -- and that isn't far from the truth, at least when it comes to the Kefrens demo, as it's a single-pixel-row buffer being streamed in realtime)

and/or

3. Help storyboard this. Basically the demo sequence. It could introduce the first tearline in a funny way, then explain how we're beam racing the tearline. Then up the ante, all the way to a more fancy version of the Kefrens demo, etc.

I suspect that at the minimum, only a few days work is needed to bring it to demo standards. But it could scale up and down. The intended open source license is Apache 2.0 afterwards.

Thanks for the tip on wanted.scene.org

Oh and to mention:

I can also be reached at -- mark [at] blurbusters.com

Obviously, I would cram the credit text accordingly with appropriate co-credits including all helpers. At the least, I want to give a shoutout to the emulator authors who have already implemented variants of these ideas, as well as Calamity (GroovyMAME), who co-invented the beamraced frameslicing technique for emulation use.

Hi Mark, and thank you for your reply. I'm a coder that never did a demo but I have other priorities at the moment, but I wish you the best for your project!

Heads up to you coders --

Related topic, but there is an 850 dollar open-source bounty (BountySource) for adding beam racing support to the RetroArch emulator -- to synchronize the emulator raster with the realworld raster:

https://github.com/libretro/RetroArch/issues/6984

....er, clicky: https://github.com/libretro/RetroArch/issues/6984

Apart from lowering input-to-display latency for hard-core retro gamers, how does this "beam racing" help? If you sync the emulated vertical refresh to the hardware display so that exactly one full emulated frame is displayed on every real displayed frame and the time proceeds at non-jittering intervals, then you get as smooth motion as that hardware display can do. Or in the case of e.g. 120 vs 60 Hz with black-frame insertion, the hardware displays an integer multiple of frames. But if you do anything other than that, then you get crap motion and/or raster splits. What kind of use-case needs the "beam racing"?

I love it! :D

yzi: If you are able to update the screen in 1/4 frame increments (which is what beam racing gives you), your emulator only needs to run 1/4th as much code before its output gets displayed on screen. So you can get sub-frame latencies.

You can get some of the same effect by having an emulator that's fast enough to be 4x realtime, but that's not always an option.
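
As back-of-the-envelope arithmetic, in Python: this only computes how much time one frame slice covers, under the simplifying assumption that emulation and scanout proceed in lockstep. `slice_latency_ms` is a made-up name for illustration.

```python
def slice_latency_ms(refresh_hz, slices_per_refresh):
    """Approximate time covered by one frame slice, in milliseconds."""
    return 1000.0 / (refresh_hz * slices_per_refresh)
```

At 60Hz a whole frame is about 16.7 ms, while a 1/4 frame slice is about 4.2 ms.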
