Gamma correct output for (OpenGL) demos - advice?

category: gfx [glöplog]

I'm in the early stages of working on a demo, and I'd like to get the sRGB/gamma correct output stuff sorted before I get into tweaking lighting and colours too much.

After googling for a bit, it all seems simple in theory, but reality doesn't seem to match. I'm so frustrated at this point I'm hoping someone who's dealt with this in the past can just step in and tell me what's up.

Basically if I just forget about sRGB output and do everything linear, it looks 'fine', but I'm concerned what I'm rendering right now is just too simple for me to tell if anything is incorrect.

All my attempts to output in sRGB have resulted in crazy blown out colours, no matter how simple the use case - e.g. a simple black->white gradient is way too white.

Situation:

- My only concern is the final output to the screen. (Ignore sRGB texture loading etc)

- Not using driver features for this (GL_FRAMEBUFFER_SRGB), my back buffer marked as GL_LINEAR

- Rendering to a linear RGB off-screen buffer

- Using a pixel shader to render off-screen buffer to full screen quad into back buffer. (I figure I can put other effects / colour tweaking in here later)

So, as far as OpenGL is concerned, everything is linear end-to-end, and the only correction is happening inside my shader.

*No* correction looks fine on a simple greyscale gradient. Both gamma 2.2 (pow(c.r, 1.0 / 2.2)), and sRGB (c.r <= 0.0031308 ? 12.92 * c.r : 1.055 * pow(c.r, 1.0 / 2.4) - 0.055) look too bright on the same gradient.

[c.r is red component, repeat for others]

Does this experience sound familiar? Any idea what's going on here? Is Windows correcting it for me later based on monitor calibration stuff? If so presumably on a compo machine the organizers would have done calibration on the projector and no one needs to worry about sRGB output?

BTW platform is NVIDIA on Windows 8.1 and Intel on Windows 10. Both behave the same.

Before I went the pixel shader post-process route I also tried to tell OpenGL to output sRGB for me (framebuffer_srgb), and ran into all sorts of similar issues which Google suggested were driver issues at the time - I figured doing it manually would be more portable and flexible in the end?

Use your final pixel-shader to gammaCorrect! ;)

Just put a line of GLSL code right before you return your color; something like this works in most cases:

color = pow(c, vec3(1.0 / 2.2)); // assuming c is a vec3; GLSL's pow() needs both arguments to have the same type

Here's a tutorial if you want to make it correct in all cases:

Gamma Correction Tutorial

Sorry, didn't read your post entirely... now I see you had that line already! ;)

dnlb: Sounds like you just need to poke at the frame buffer values and see what's going on. Compare the bytes with reference values. 0.5 should encode to 188, for instance.

In my experience, having correct sRGB output is pretty much the best you can do, and things will look reasonably correct on most screens / projectors.
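For anyone who wants to do the byte comparison suggested above, here is a minimal sketch of the standard piecewise sRGB encode followed by 8-bit quantization. It is plain Python rather than GLSL so the reference values can be checked without a GL context; the function names are made up for illustration.

```python
def srgb_encode(c: float) -> float:
    """Map a linear [0, 1] value to an sRGB-encoded [0, 1] value."""
    c = min(max(c, 0.0), 1.0)
    if c <= 0.0031308:
        return 12.92 * c  # linear toe near black
    return 1.055 * c ** (1.0 / 2.4) - 0.055


def to_byte(v: float) -> int:
    """Quantize an encoded [0, 1] value to the 8-bit framebuffer value."""
    return int(v * 255.0 + 0.5)


if __name__ == "__main__":
    # Reference table to compare against pixels read back from a screenshot.
    for linear in (0.0, 0.25, 0.5, 0.75, 1.0):
        print(linear, to_byte(srgb_encode(linear)))
```

If a linear 0.5 in the off-screen buffer shows up as 188 in the screenshot, the encode step is doing what it should.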

Do the video-card drivers have correction curves? I know I've seen drivers that do, either in Control Panel or in their tray popups.

I did some color science at work many years ago, and, yeah, reality can get grungy :) . Just make sure you're with Poynton instead of the other guy. ;)

Also, what kusma said.

I just checked, and for Phong in ShaderToy I had to manually 1/2.2 the colors. I am not sure what buffer settings ShaderToy uses, however.

You said the back buffer is linear - is the *front* buffer also linear?

Reminder: 8 bits are not enough for linear. Make sure you are using higher bit depths, unless your demo has a very contoured style!

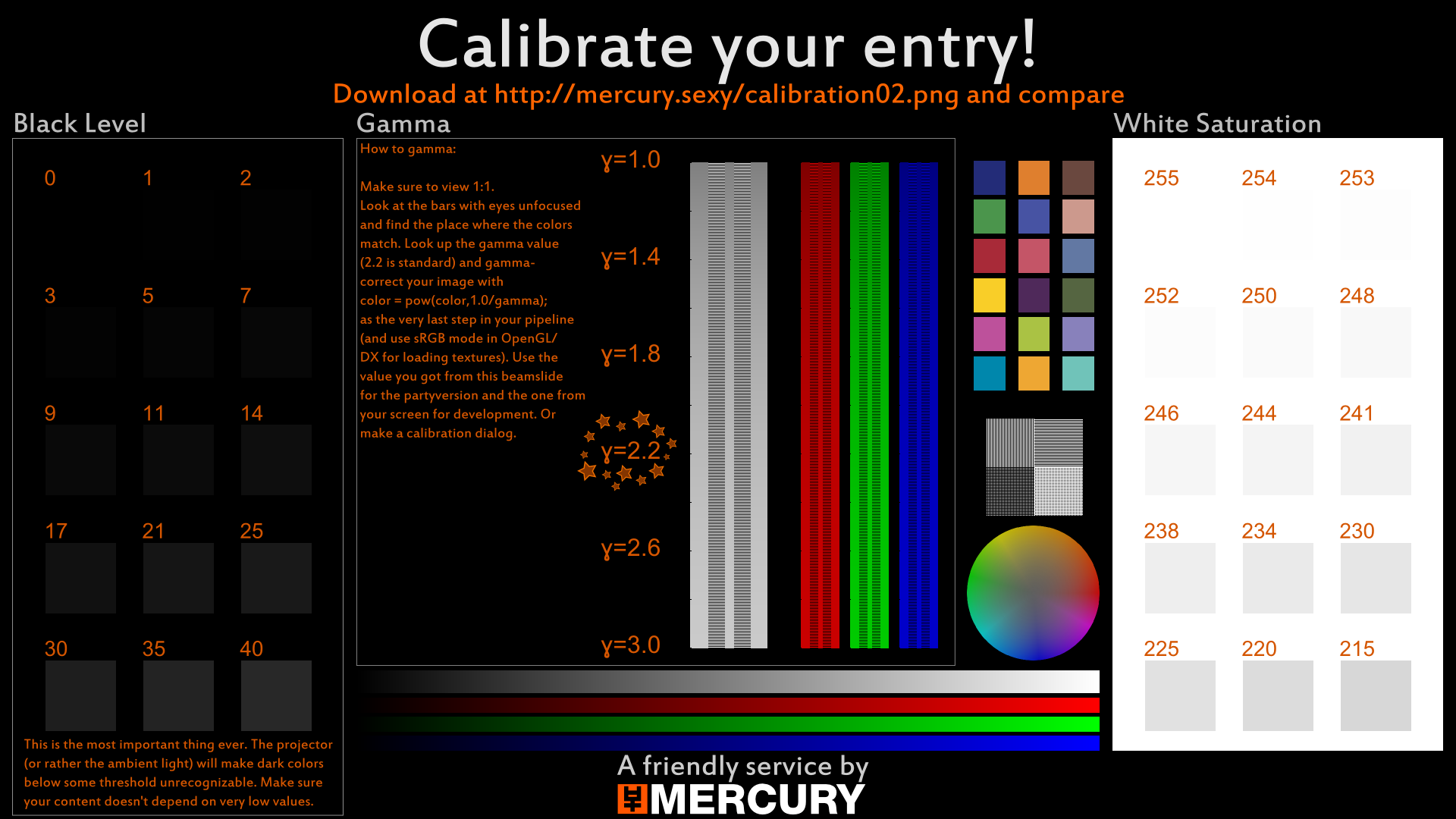

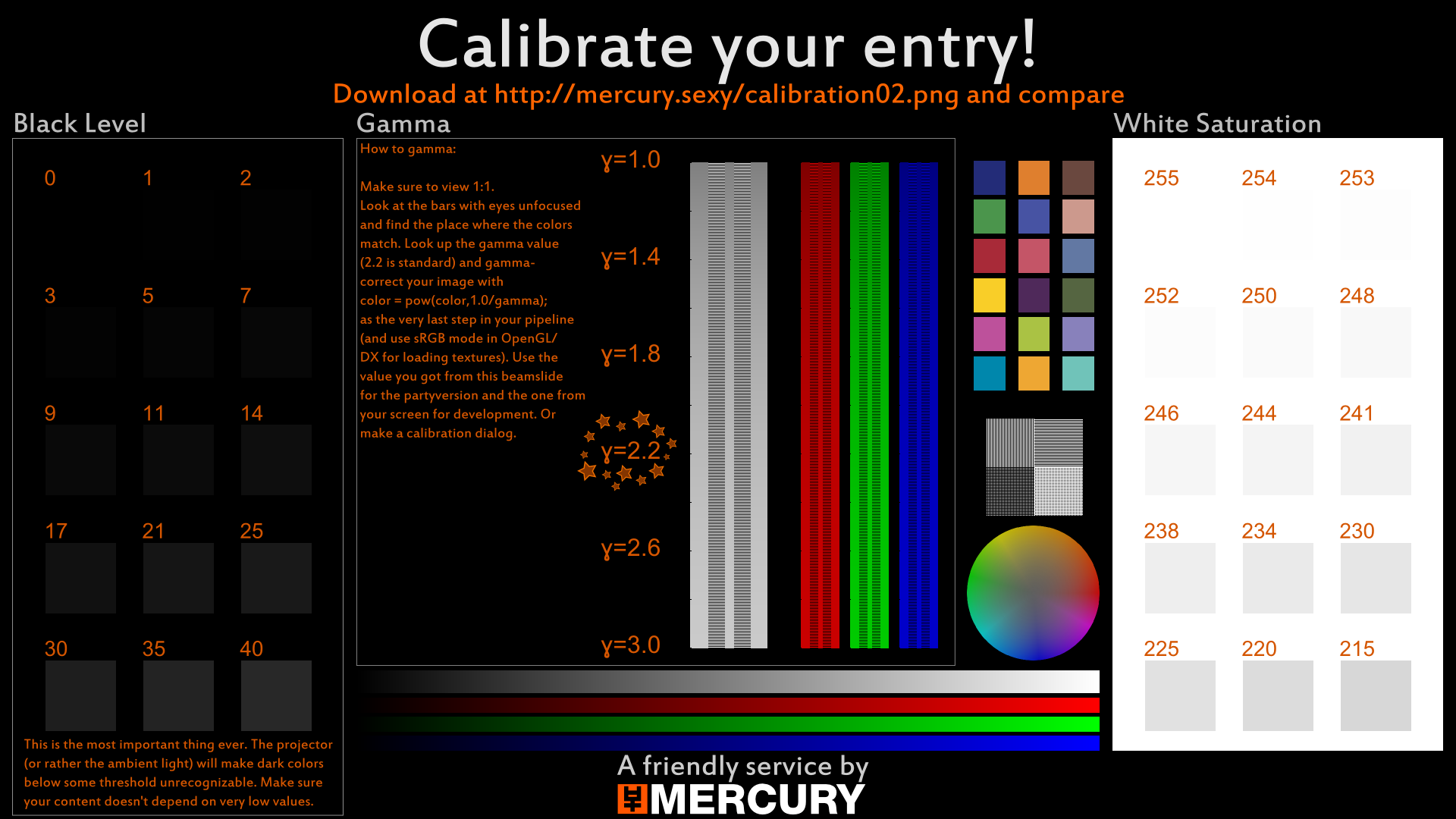

An easy way to check your gamma value is to render a black/white stripe pattern next to a gray ramp, look at your screen from a distance, and compare the gray value that perceptually matches the average of black and white, such as here:

(This is actually intended as a beamslide for demoparties, to get the projector's black value, whitepoint and gamma value).

Using something like this you can easily see whether your output is already gamma-corrected by the driver, or if you still have to do it yourself.
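The arithmetic behind the stripe test can be sketched like this, assuming the display follows a pure power law and the stripes are not blurred by scaling (both are simplifications). Fine black/white stripes emit on average half of the maximum light, so the solid grey that matches them tells you the display gamma. The function names are hypothetical.

```python
import math


def matching_gray(display_gamma: float) -> int:
    """8-bit grey that emits the same light as 50/50 black/white stripes."""
    return int(255.0 * 0.5 ** (1.0 / display_gamma) + 0.5)


def gamma_from_match(gray_code: int) -> float:
    """Display gamma implied by the grey code that visually matches the stripes."""
    return math.log(0.5) / math.log(gray_code / 255.0)


if __name__ == "__main__":
    for g in (1.0, 1.8, 2.2, 2.4):
        print(g, matching_gray(g))
```

A match near 186 suggests the output path behaves like gamma 2.2; a match near 128 would mean the signal is being shown linearly.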

You should probably make a subsite for that so it gets Googleindexed in case a party organizer tries looking for it and googles "mercury calibration beamslide" or something (I know I have done that.)

Mercury calibration beamslide

(links with meaningful names also help Google and friends)

Gargaj: https://demozoo.org/graphics/181448/ :)

urs: thanks! Very handy. I like the Macbeth chart too --- happy memories from years gone by.

Saga: you beat me by four seconds! Man... ;)

Never mind... <ducks for cover and leaves thread quietly, wishing pouet had editable posts>

cxw:

The problem with calibration is that you depend on users doing something. They won't. For a party-release, it *might* be worth it, but in hindsight, it was never worth the hassle the few times I did it.

Quote:

All my attempts to output in sRGB have resulted in crazy blown out colours, no matter how simple the use case - e.g. a simple black->white gradient is way too white.

Can you post a screenshot? Note that a gamma corrected linear gradient is supposed to be quite bright, something like this is correct:

Back to the original issue:

If you take your linear 0..1 gradient, then gamma-encode it by applying c= pow(c,1/2.2), you will end up with a gradient that does not look uniform, but instead looks too bright. This is correct and expected. You are good. Everything is fine. Carry on!

To see why, take a few steps back and think about why this gamma encoding is done. Note that this is not gamma calibration. Calibration would be what you might do at your own screen for a game, applying a very small gamma on top of the regular one, like 1.05 or 0.97, as a poor man's eyeballed screen calibration. (I avoid the word "correction" because I think it's misleading)

Gamma encoding, on the other hand, serves to compress data, and nothing more. It's an encoding, like zip compression, that can be done and then undone, with the problem that the data looks the same and you basically need metadata to tell you if you're in gamma space or not. We need this encoding because we're still living in the 90s basically: we have only 8 bits per color channel in our display cables and file formats (let's keep the HDR discussion out of this thread). Display hardware has to assign each of those 256 values a brightness (a number of photons emitted). Two of those values are fixed: C=0 means no photons (or the lowest amount of photons the hardware can emit, but let's pretend it's 0), C=255 means the maximum number of photons.

The naive linear assignment would imply that 128 means twice the number of photons compared to 64, and so on. Unfortunately this assignment sucks because 10 gazillion photons look to the human eye exactly like 9.9 gazillion photons, but 0.2 gazillion is totally different from 0.1 gazillion. This is a result of the Weber-Fechner law, which states that human perception works on differences relative to the value of a stimulus, i.e. the absolute differences are not what we should care about, it's all about the relative differences. For our naive linear brightness assignment this means that it spends unnecessary precision on the higher values, where we won't even be able to perceive a difference, while the lowest values will look like huge steps and the quantization to 8 bits will destroy everything.

So, if you gamma-encode your data in your renderer or your digital camera, then transmit it over a display cable or a png file, then gamma-decode it inside the display hardware... yay, perceptually-friendly quantization! And now 8 bits are actually sort-of enough. The screen has an internal lookup table to convert that 8-bit value to its own internal linear brightness value, which then drives the pixels.
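To put rough numbers on the precision argument above, here is a small sketch that counts how many of the 256 codes end up covering the darkest 1% of physical brightness, once with the naive linear assignment and once with a gamma 2.2 encoding. The pure 2.2 power law and the function names are assumptions for illustration.

```python
GAMMA = 2.2


def encode_linear(x: float) -> float:
    """Naive assignment: code value proportional to emitted light."""
    return x


def encode_gamma(x: float) -> float:
    """Gamma encoding: spends more code values on dark tones."""
    return x ** (1.0 / GAMMA)


def codes_at_or_below(linear_level: float, encode) -> int:
    """Number of 8-bit codes (0..255) representing light <= linear_level."""
    return int(encode(linear_level) * 255.0) + 1


if __name__ == "__main__":
    print("linear:", codes_at_or_below(0.01, encode_linear))
    print("gamma :", codes_at_or_below(0.01, encode_gamma))
```

With the linear assignment only a handful of codes describe everything darker than 1%, which is where the visible banding comes from; the gamma-encoded version has roughly ten times as many.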

Now back to what you did: You made a linear gradient, gamma-encoded it, then the screen gamma-decoded it and it was displayed as a linear gradient. And linear gradients look like this! They are not pleasing, they look like there's almost no change in the brighter part and all the change is in the darker part because that's precisely what the Weber-Fechner thing states: we only perceive relative differences. So, all the familiar gradients that we consider/perceive as "linear" are not linear in the sense of photons emitted, but (very roughly) linear in this perceptual space.

This has a few important consequences:

1. Doing any kind of math operation in gamma space (e.g. on values as they are read from image files) is always wrong. But people still do it all the time because those numbers seem linear to us. Even Photoshop didn't do correct alpha blending (gamma decode both layers, alpha blend, gamma encode result) until 3 years ago or so, and AFAIK it's still not the default. Sad!

2. In your renderer, the process goes like this: Somehow you render your linear brightness, i.e. the number of photons hitting a pixel in your virtual camera sensor (this idea is basically the heart of physically based rendering). Then you get to solve the same problem that analog and digital cameras both have to deal with: the dynamic range is huge, and we have to get the [0..inf] brightness interval into a [0..1] interval without it looking like crap somehow. This is called tone mapping, and over a hundred years of research have gone into chemical films to do this. It is valid to use the identity function as your tone mapper, but that will just clip values. My favourite tonemapping function is the Uncharted2 tonemapping from here - maybe play with those totally arbitrary values a bit. After the tone mapping, we are left with our brightness squashed into a physically linear [0..1] interval (same as a printed photograph - it reflects between 0 and 100% of the physical light), and now we gamma-encode this so it can fit through that narrow DisplayPort cable (and because that's what the screen expects). Bonus points for using sRGB instead of gamma, but that's a very minor detail after getting the big picture right.

3. In your renderer, before doing math on pixel values taken from textures (like... using them in any way, really), make sure that they are in physically linear space. Then do your stuff. Lighting, blending, whatever. Then tonemap and gamma-encode. (Bonus points for dithering before gamma-encoding to help with color banding due to the 8bit quantization.)

4. If you just thought "waaaaiiit, what about bilinear filtering of gamma-encoded pixels from my png textures? This is doing math with pixels, is this bad?" - congratulations, now you are thinking linear :) And yes it is. That's what OpenGL's/DirectX's sRGB mode in textures is for.

5. There's a trap here: if you don't gamma-decode your textures AND if you don't gamma-encode your final output, those two errors can sort of cancel out and your result might look okayish (and fixing only one half will make it look too bright or too dark, so you have to fix both at the same time). But you will have to do all your lighting with horrible hacks that work for just one setting, you will have to tweak everything by hand to make it look presentable, and it will still look like shit. You will have to use thresholds for your bloom and other atrocities that people had to put up with ten or fifteen years ago due to hardware limitations.
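Point 1 can be illustrated with a 50/50 blend of black and white. This is a sketch in plain Python using the standard sRGB decode/encode pair; the blend helpers are hypothetical names, not anything from a particular tool.

```python
def srgb_decode(v: float) -> float:
    """sRGB-encoded [0, 1] value to linear light."""
    if v <= 0.04045:
        return v / 12.92
    return ((v + 0.055) / 1.055) ** 2.4


def srgb_encode(c: float) -> float:
    """Linear light in [0, 1] to an sRGB-encoded value."""
    if c <= 0.0031308:
        return 12.92 * c
    return 1.055 * c ** (1.0 / 2.4) - 0.055


def blend_naive(a: float, b: float, t: float) -> float:
    """Mix the encoded values directly (the common mistake)."""
    return a * (1.0 - t) + b * t


def blend_linear(a: float, b: float, t: float) -> float:
    """Decode, mix in linear light, then encode the result again."""
    return srgb_encode(srgb_decode(a) * (1.0 - t) + srgb_decode(b) * t)


if __name__ == "__main__":
    black, white = 0.0, 1.0
    print("naive  byte:", int(blend_naive(black, white, 0.5) * 255.0 + 0.5))
    print("linear byte:", int(blend_linear(black, white, 0.5) * 255.0 + 0.5))
```

The naive blend stores 0.5, which the display decodes to only about 21% of the light, so the mix comes out too dark; the linear blend stores the value that really emits half the light.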

(That's also why in photography they use what they call an "18% grey" cardboard piece for calibration - it reflects 18% of the physical light, but appears to us as "half the brightness" of a 100% white piece of cardboard.)
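The 18% grey figure can be checked against CIE 1976 lightness (L*), which is one standard model of perceived lightness. It is brought in here only as an illustration and is not something the post itself relies on.

```python
def cie_lightness(y: float) -> float:
    """CIE 1976 L* (0..100) for a relative luminance y in [0, 1]."""
    delta = 6.0 / 29.0
    if y > delta ** 3:
        f = y ** (1.0 / 3.0)
    else:
        # Linear segment used for very dark values.
        f = y / (3.0 * delta ** 2) + 4.0 / 29.0
    return 116.0 * f - 16.0


if __name__ == "__main__":
    for y in (0.18, 0.5, 1.0):
        print(y, round(cie_lightness(y), 1))
```

Under this model 18% reflectance lands almost exactly at L* = 50, while a physical 50% already sits around L* = 76, which is why the physically linear ramp looks so bright.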

(Side note: The Weber-Fechner law is actually only a coarse approximation for human vision and it's a bit more complex, but who cares.)

If you are really interested in this topic, the Gamma FAQ will leave no questions unanswered (and will probably correct a few things I just said).
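The order described in point 2 (tone map first, then gamma-encode) might be sketched as follows. The curve and constants are the ones commonly quoted for John Hable's Uncharted 2 operator; since the link in the post is not preserved here, treat them as an assumption, and the helper names are made up. Plain Python is used so the numbers can be inspected without a GL context.

```python
# Commonly quoted constants for the Uncharted 2 filmic curve (assumed).
A, B, C, D, E, F = 0.15, 0.50, 0.10, 0.20, 0.02, 0.30
W = 11.2  # linear scene value that should end up as pure white


def hable_curve(x: float) -> float:
    """The raw filmic curve, before white-point normalization."""
    return ((x * (A * x + C * B) + D * E) / (x * (A * x + B) + D * F)) - E / F


def tonemap(x: float) -> float:
    """Squash [0, inf) scene brightness into roughly [0, 1], still linear."""
    return hable_curve(x) / hable_curve(W)


def encode_gamma(c: float) -> float:
    """Clamp, then gamma-encode for the display (pure 2.2 power law)."""
    return min(max(c, 0.0), 1.0) ** (1.0 / 2.2)


def output_byte(hdr_linear: float) -> int:
    """Full chain: scene-linear value to the 8-bit value sent to the screen."""
    return int(encode_gamma(tonemap(hdr_linear)) * 255.0 + 0.5)


if __name__ == "__main__":
    for scene in (0.0, 0.18, 1.0, 4.0, W):
        print(scene, output_byte(scene))
```

Swapping encode_gamma for a proper sRGB encode would be the "bonus points" variant; the order of the two steps is the part that matters.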

Quote:

- Not using driver features for this (GL_FRAMEBUFFER_SRGB), my back buffer marked as GL_LINEAR

Why tho? glEnable(GL_FRAMEBUFFER_SRGB) is literally a one line fix to this without remembering to do it by hand each time and it gives the actual sRGB space unlike the pow(c,1/2.2) thing. If the latter point ever happens to matter.
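How much the "actual sRGB" curve differs from the pow(c, 1/2.2) shortcut can be estimated with a brute-force scan. This is a sketch; the function names and the sampling density are arbitrary choices.

```python
def srgb_encode(c: float) -> float:
    """Piecewise sRGB encode for a linear value in [0, 1]."""
    if c <= 0.0031308:
        return 12.92 * c
    return 1.055 * c ** (1.0 / 2.4) - 0.055


def gamma22_encode(c: float) -> float:
    """The pure power-law approximation."""
    return c ** (1.0 / 2.2)


def max_difference(steps: int = 10000):
    """Return (largest absolute difference, linear value where it occurs)."""
    worst, where = 0.0, 0.0
    for i in range(steps + 1):
        x = i / steps
        d = abs(srgb_encode(x) - gamma22_encode(x))
        if d > worst:
            worst, where = d, x
    return worst, where


if __name__ == "__main__":
    worst, where = max_difference()
    print("max difference:", worst, "at linear", where)
    print("in 8-bit codes:", worst * 255.0)
```

The two curves agree closely over most of the range; the gap is concentrated in the very dark tones, where the power law has no linear toe.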

Thanks everyone for your responses! :)

I'm not at my computer right now, but later tonight I will take a screen shot to post here, and will also sample its precise colour values to see if they match the expected. (I'd convinced myself even a screen shot couldn't be trusted to reflect actual monitor output, but better to check anyway.)

@absence: indeed, the gradient you posted is the same as what I got. So if that's correct, that's great! It just didn't make intuitive sense to me...

Also I was misled by pages like this one, where in the "Light emission vs perceptual brightness" section that guy posts the two ramps and, maybe with a bad assumption on my part, I jumped to the conclusion that it was saying the more visually pleasing one was 'right'.

@cupe: thanks a lot for your massive post!

I knew about the gamma-as-compression thing but I'd not put all the pieces together. This was the key sentence that made me understand:

Quote:

You made a linear gradient, gamma-encoded it, then the screen gamma-decoded it and it was displayed as a linear gradient. And linear gradients look like this! They are not pleasing, they look like there's almost no change in the brighter part and all the change is in the darker part because that's precisely what the Weber-Fechner thing states: we only perceive relative differences. So, all the familiar gradients that we consider/perceive as "linear" are not linear in the sense of photons emitted, but (very roughly) linear in this perceptual space.

So basically, the *right* gradient looks wrong because of crappy human meat-eyes and me not being accustomed to seeing a real, physically accurate gradient because they don't exist in reality.

Is the tone-mapping step required to get remotely sane looking results on simple scenes? Or, knowing that my output is probably gamma correct now, should I just go try and tweak lights so everything isn't so damn bright?

@msqrt: I'm doing it in a shader right now because I implemented first using the built in sRGB framebuffer functionality, it looked 'wrong' (even though I know now it was probably right), and I was worried about driver issues so I figured better just to eliminate the driver-specific part for my own sanity.

Maybe it's safe to put it back now? I dunno if it's worth the spectre of "what if the driver does sRGB writes to my linear texture buffer even though the spec says it shouldn't" thing hanging over me right now though.

Re: the stripe+ramp pattern on the beamslide, if that pattern is already being fed through gamma correction, should the greys converge at 1.0?

E.g. I look at the slide on my monitor, it points at 2.1, so I encode my output with pow(c, 1/2.1) - if I then feed the pattern through that output again should I get convergence at 1.0?

If so that's a very easy way for me to check if this is working.

Here's the screenshot of the ramp rendered by my program, with a mark at 50%:

50% is indeed 188.

I also calibrated my monitor using the Mercury beamslide, and everything looks better :D

(Quite a bit darker, it turns out)

When I import the beamslide and display it in my engine, it looks exactly the same as it does in the web browser. I.e. after adjustment, convergence at 2.2. Which makes sense I guess - ignore my question above about it being on 1.0.

So it looks like we're good! Sometimes you just need someone to tell you, "that's normal" I guess.

Quote:

So basically, the *right* gradient looks wrong because of crappy human meat-eyes and me not being accustomed to seeing a real, physically accurate gradient because they don't exist in reality.

Basically, yes. :) It's actually due to evolutionary efficiency rather than being crappy. The compression increases performance in low light. It's the same with sound. If you fade out the physical pressure waves linearly, it will sound abrupt rather than like an even fade.

(Can't get dropbox image link to work, right click and copy link address to see it I guess...)

Lighting in linear is the physically correct thing to do, but saying that anything else "will still look like shit" is not right either.

The fun fact of gamma: It doesn't look correct at first sight. And if you render a gradient, the gamma corrected version looks somehow wrong. Congrats, you've done it correctly. Gradients are the most-shitty-thing-to-use[tm] to check if everything is right. Somehow gamma corrected gradients tend to have this "white look" in between, especially for pure colors. Draw a gradient from pink to yellow and you'll see what I mean.

And as a side note: That PBR shiznat. Without a good approximation of the surrounding environmental lighting (image based or whatever) it will look crap. Even good old Blinn-Phong can look better if you fake energy conservation and slap some blurry Perlin cubemap on it. It's simple: if you have a ball made of a perfectly reflective material, a white light and no environmental lighting approximation, you get a black ball with a white specular on screen... correct but boring.

Code:

diffuse *= 1.0 - specular; // It's fucking magic... now let's cheat!

Disclaimer:

1. I'm drunk

2. see 1.

3. Abductees mudda

4. I approximate Fresnel with smoothstep

5. No, I don't hate PBR

Just my two cent of bullshit.

Okay... I'm warmed up now. While we are at it, without an HDR monitor it's crap anyways - welcome to banding land. And then someone slaps on "filmic" noise - I could puke. Filmic, really? That feels like me 20 years ago - in case of doubt, add layers and layers of noise. Even with 10 bits per channel or 16 bit float - whatever perfect rendering you have in the backbuffer - 99.9% of all monitors suck when it comes to sRGB range... even worse on laptop LG bullshit panels.

I bet my ass, there is a color profile mafia.

Okay, last but not least:

Quote:

Quote: - Not using driver features for this (GL_FRAMEBUFFER_SRGB), my back buffer marked as GL_LINEAR

Why tho? glEnable(GL_FRAMEBUFFER_SRGB) is literally a one line fix to this without remembering to do it by hand each time and it gives the actual sRGB space unlike the pow(c,1/2.2) thing. If the latter point ever happens to matter.

Some copy-and-paste[tm] tonemapping operators you want to play with have the gamma factored in... and some people's math is not good enough (like mine) or they are too lazy (like me) to factor it out...

Hopefully I'm done now.
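One widely copied operator that fits this description is the Hejl / Burgess-Dawson filmic approximation, which is usually quoted with the 1/2.2 already baked into the result. It is named here only as an example and is not taken from this thread. A sketch of what happens when you gamma-encode its output a second time:

```python
def filmic_hejl(c: float) -> float:
    """Hejl / Burgess-Dawson approximation; output is already display-encoded."""
    x = max(0.0, c - 0.004)
    return (x * (6.2 * x + 0.5)) / (x * (6.2 * x + 1.7) + 0.06)


def encode_gamma(c: float) -> float:
    """Pure 2.2 power-law encode, as used after a linear-output tone mapper."""
    return c ** (1.0 / 2.2)


if __name__ == "__main__":
    mid_grey = 0.18  # scene-linear mid grey
    once = filmic_hejl(mid_grey)
    twice = encode_gamma(once)  # the mistake: encoding an encoded value
    print("operator output :", once)
    print("encoded again   :", twice)
```

Mid grey coming out around 0.5 is already in display space; running it through pow(1/2.2) again pushes it well above 0.7 and washes out the image, so check what a pasted operator returns before adding your own encode.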